Seriously Fast ArcFM Designer QA

We all need quality assurance checking. I personally can’t count the number of spell-check corrections MS-Word has already caught as I type right now.

ArcFM™/Designer designs are no different.

Even though your implementation may include AutoUpdaters to assign required field values, establish relationships or set extended data values, there are always ways these values can be changed or unset, making a once-valid design invalid or non-conforming to the standards you have established. And this is why we have QA.

The thing is, sometimes QA can be, shall we say, um… sluggish.

We recently had an experience where Designer QA performance was exactly that. This article describes how we diagnosed the performance issues and ultimately improved things. A lot.

The QA Framework

First, a little bit about how ArcFM™ QA operates. As you may know, the ArcFM™ Solution provides a flexible framework within which QA “rules” can be executed. Though it might not be the most glamorous part of the solution, the ArcFM™ QA framework adds significant value over the QA functionality you get from ArcMap out-of-the-box. Here are just a few of the features that come with ArcFM™ QA compared to the basic ArcMap validate command.

- ArcFM™ QA reports all QA errors that may be present for a given feature, not simply the first error.

- ArcFM™ QA provides a reviewable list of errors by feature that you can navigate, zoom and pan to. By comparison the validate command simply selects features found to be invalid.

- ArcFM™ QA allows you to export a set of errors from one session and import them into another. This can be really helpful for error documentation and for sharing the QA effort among a team of editors.

- As a framework, ArcFM™ allows you to create custom QA rules that operate within the same process as product level rules.

So, fundamentally, for a serious enterprise ArcGIS implementation, we can’t see how an organization can maintain data quality without using ArcFM™ QA, or something much like it.

An important point about how the rules function is that the QA framework iterates over each GIS object in the design, discovers which if any rules are configured to apply for the object type and then fires any rules found to apply.

Evaluation of each GIS object is completely independent of evaluation of any other GIS object. This independence proves essential to the understanding we gain later in the process.
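To make that flow concrete, here is a minimal sketch of the per-object dispatch described above. It is not the ArcFM™ API: `GisObject`, `run_qa`, the two sample rules and the rule table are all hypothetical names we made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class GisObject:
    """Hypothetical stand-in for a GIS object in a design."""
    object_id: int
    object_type: str
    attributes: dict


# A rule looks at one object and returns an error message, or None if it passes.
Rule = Callable[[GisObject], Optional[str]]


def run_qa(design_objects, rules_by_type):
    """Evaluate each object independently and collect every error it has."""
    errors = defaultdict(list)
    for obj in design_objects:
        # Discover which rules, if any, are configured for this object type.
        for rule in rules_by_type.get(obj.object_type, []):
            message = rule(obj)
            if message is not None:
                errors[obj.object_id].append(message)
    return dict(errors)


def require_height(obj):
    return None if obj.attributes.get("height") else "height is required"


def require_owner(obj):
    return None if obj.attributes.get("owner") else "owner is required"


design = [
    GisObject(1, "Pole", {"height": 40, "owner": "Utility"}),
    GisObject(2, "Pole", {}),
    GisObject(3, "Span", {}),  # no rules configured for this type
]
rules = {"Pole": [require_height, require_owner]}

qa_errors = run_qa(design, rules)
print(qa_errors)
```

Note that the second pole reports both of its errors, not just the first, and that nothing computed for one object is carried over to the next.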

Our QA Case

While QA is great, and really invaluable in an enterprise implementation, the more you ask of it the slower it can get. Have to say “Doh!” when you put it that way.

In our case QA performance got seriously slow. So slow in fact that it almost made the largest designs impossible to complete, as a successful QA run was defined as a prerequisite for submitting to post, and the largest designs could take over an hour to QA.

So… recognizing a problem we dug in. The first question was, is this a performance issue baked into the QA framework itself, or are there some QA rules that take more time than others? To address this question we added instrumentation to each custom QA rule to determine total processing time consumed by each rule.
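The kind of instrumentation we mean can be sketched in a few lines. This is an illustrative Python version, not the code we deployed: the `timed_rule` decorator, the `rule_seconds` and `rule_calls` tables and the sample rule are all assumed names, and the `sleep` call is only a stand-in for real rule work.

```python
import time
from collections import defaultdict
from functools import wraps

# Running totals per rule name, accumulated over the whole QA run.
rule_seconds = defaultdict(float)
rule_calls = defaultdict(int)


def timed_rule(name):
    """Wrap a rule so each call adds its elapsed time to the rule's total."""
    def decorator(rule):
        @wraps(rule)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return rule(*args, **kwargs)
            finally:
                # Record the time even if the rule raises.
                rule_seconds[name] += time.perf_counter() - start
                rule_calls[name] += 1
        return wrapper
    return decorator


@timed_rule("custom_rule_1")
def custom_rule_1(feature):
    time.sleep(0.01)  # stand-in for the rule's real work
    return None


for feature in range(5):
    custom_rule_1(feature)

# Report the most expensive rules first.
for name, seconds in sorted(rule_seconds.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {seconds:.3f}s over {rule_calls[name]} calls")
```

Summing per rule rather than per call is what lets you compare rules against each other across designs of different sizes.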

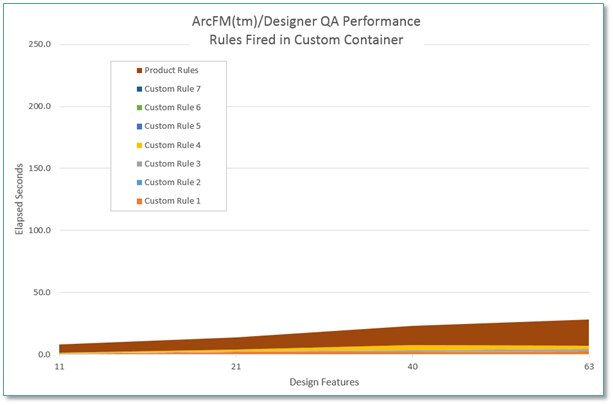

Figure 1 shows our initial results. First, performance was directly related to the number of features in a design. Second, the core product rules (dark brown band at the top) contributed only a small amount to the total QA time. Finally, it was clear that if we could find a way to improve custom rules 1, 2 and 4 (brown, blue and yellow bands), we could achieve a significant improvement.

Figure 1 Initial QA Performance Timing

The chart begged the obvious question: what’s up with the custom rules?

What’s up with the Custom Rules?

Our QA case included seven custom rules in addition to the core ArcMap/ArcFM™ rules. Four of them had little impact on overall QA performance, but three of them did. In fact, rules 1, 2 and 4 accounted for between 92% and 95% of the total QA processing time.

Digging further we found one thing in common with our time-consuming rules – they all involved examination of the Design Tree XML to determine a feature’s validity.

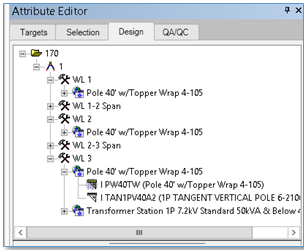

What you see in Designer’s Design Tree View is the collection of work locations, GIS features, compatible units and extended data that comprise a design. The Designer API provides a way for an implementer to get to this design tree for all kinds of purposes, though for a design of any size it may take a full second or more to retrieve.

In our case one rule was examining extended data that our implementation uses to hold accounting codes, another rule was verifying that compatible units assigned to poles meet corporate standards, and the third was testing for unique values.

The key point is that each of these rules needs to access the Design Tree for each feature evaluated. So, for example, if we have 63 features in our design, as we did in the last case of our initial test, and our three expensive QA rules need to fire for each feature, then we need to access the Design Tree 189 times.

If each of those retrievals takes a second, then this would account for 189 of the 214 elapsed seconds in our 63-feature test design.
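Spelled out as arithmetic, using only the numbers above and the one-second-per-retrieval assumption (the variable names are ours):

```python
features = 63
expensive_rules = 3
seconds_per_retrieval = 1.0  # assumption: "if each of those retrievals takes a second"
total_elapsed_seconds = 214

# Every expensive rule re-fetches the Design Tree for every feature.
retrievals = features * expensive_rules
retrieval_seconds = retrievals * seconds_per_retrieval
share_of_run = retrieval_seconds / total_elapsed_seconds

print(f"{retrievals} retrievals, about {share_of_run:.0%} of the run")
```

Under that assumption, retrieval alone is roughly 88% of the elapsed time, which sits comfortably inside the 92% to 95% we measured for the three rules as a whole.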

There must be Another Way

Of course there is another way. There’s always another way.

In our case the other way would have to involve getting the Design Tree XML once at the beginning of the QA process and using it for each feature instead of getting and re-getting the XML for each feature.

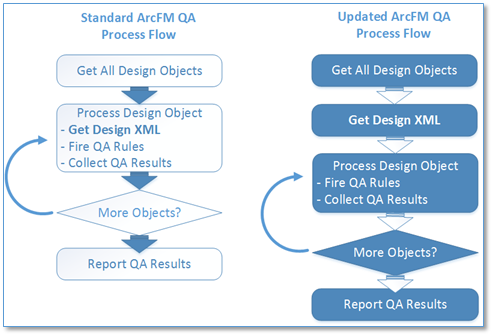

Unfortunately, we couldn’t find a way to do this for all QA rules, both product and custom, and stay within the ArcFM™ product QA framework. Instead, we built a new QA container with an adjusted process flow so that the Design XML would be retrieved once, at the beginning of the QA process, and then used for each feature for which it is required.
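The difference between the two process flows can be sketched as follows. This is an illustration only: `fetch_design_tree` is a hypothetical stand-in for the slow Designer API call, and the XML layout, the `cu_rule` check and the approved-unit list are invented for the example rather than taken from the real Design Tree schema.

```python
import xml.etree.ElementTree as ET

# Invented, simplified design XML; the real Design Tree is richer than this.
DESIGN_XML = """
<design>
  <feature id="1" type="Pole"><cu code="P40"/></feature>
  <feature id="2" type="Pole"><cu code="BAD"/></feature>
  <feature id="3" type="Pole"><cu code="P45"/></feature>
</design>
"""

APPROVED_CUS = {"P40", "P45"}  # hypothetical corporate standard
fetch_count = 0


def fetch_design_tree():
    """Hypothetical stand-in for the expensive Design Tree retrieval."""
    global fetch_count
    fetch_count += 1
    return ET.fromstring(DESIGN_XML)


def cu_rule(feature_id, tree):
    """Return an error for each unapproved compatible unit on the feature."""
    feature = tree.find(f"./feature[@id='{feature_id}']")
    codes = [cu.get("code") for cu in feature.findall("cu")]
    return [f"unapproved CU {code}" for code in codes if code not in APPROVED_CUS]


def qa_initial(feature_ids):
    # Initial flow: the tree is fetched again for every feature.
    return {fid: cu_rule(fid, fetch_design_tree()) for fid in feature_ids}


def qa_updated(feature_ids):
    # Updated flow: fetch once up front, then hand the same tree to every rule call.
    tree = fetch_design_tree()
    return {fid: cu_rule(fid, tree) for fid in feature_ids}


ids = ["1", "2", "3"]

fetch_count = 0
initial = qa_initial(ids)
initial_fetches = fetch_count

fetch_count = 0
updated = qa_updated(ids)
updated_fetches = fetch_count

print(initial == updated, initial_fetches, updated_fetches)
```

The rule logic is untouched between the two flows; only the point at which the tree is retrieved moves. Passing the tree in as a parameter is safe here because the design does not change during a QA run.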

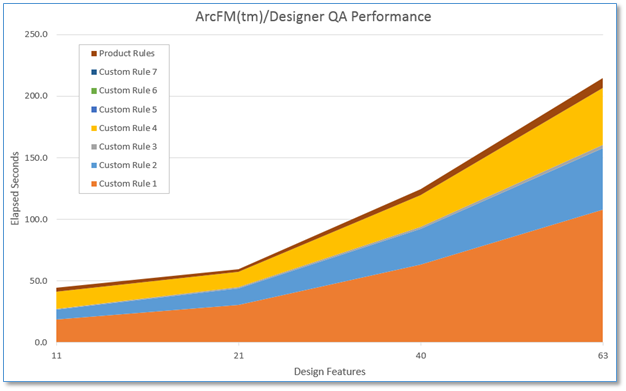

While a downside to our change was that we could not stay “inside the box” with use of the ArcFM™ QA framework, a significant upside was the demonstrated performance improvement, as illustrated by the chart. In our largest test case, the QA processing that had previously taken 214 seconds (about three and a half minutes) for 63 design features now takes approximately 28 seconds.
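As a rough sanity check, the measured result lines up with the earlier back-of-the-envelope estimate. The sketch below reuses the one-second-per-retrieval assumption, so treat the prediction as approximate; the variable names are ours.

```python
initial_seconds = 214
updated_seconds = 28          # measured after the change
retrievals_before = 63 * 3    # one per feature per expensive rule
retrievals_after = 1          # Design XML fetched once per QA run
seconds_per_retrieval = 1.0   # assumption carried over from the estimate above

# Time not spent on retrieval in the initial run.
other_work_seconds = initial_seconds - retrievals_before * seconds_per_retrieval
# What we would expect the updated run to take if only retrieval changed.
predicted_updated_seconds = other_work_seconds + retrievals_after * seconds_per_retrieval
speedup = initial_seconds / updated_seconds

print(predicted_updated_seconds, round(speedup, 1))
```

A prediction of about 26 seconds against a measured 28 suggests that repeated Design Tree retrieval really was the bulk of the problem, and the measured speedup works out to a little over 7.6 times.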

When we tested in the user acceptance environment and later in production, designs that had previously taken an hour to QA were taking approximately a minute or two.

It should be noted that the exact same logic was performed in the “Initial” and “Updated” QA processes, though the updated process needed to perform much less work to complete its task.

Summary

Quality assurance for our ArcFM™/Designer implementation was always considered critical to success. Letting data errors slip through the QA process and into the asset management system would bring corporate data quality standards into question and take many person-hours to resolve. However, the initial deployment of QA as controlled by the ArcFM™ QA framework could take a very long time and slow design technician productivity. After examining what our custom QA rules were actually doing, we built a new containing “framework” and executed the same rules with the same results, but with a performance improvement of more than sevenfold.